Understanding Algorithmic Efficiency

Introduction

Algorithmic efficiency describes how much of two resources an algorithm consumes: the time it takes to complete and the memory it requires. It is usually discussed in terms of time complexity and space complexity, which describe how that consumption grows with the size of the input (denoted n).

Time Complexity

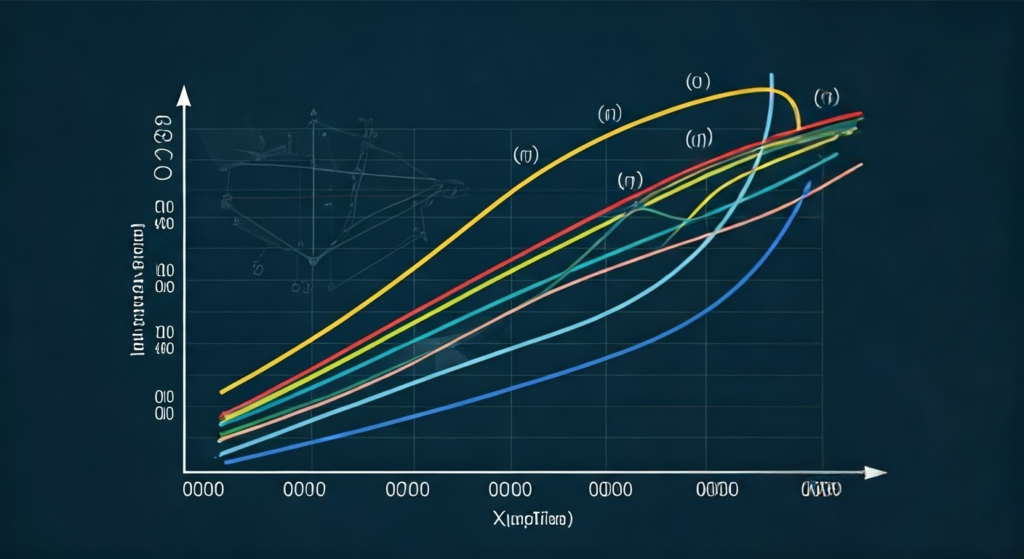

Time complexity measures how the number of basic operations an algorithm performs grows with the input size. It is usually expressed in Big O notation, which gives an upper bound on that growth rate and ignores constant factors and lower-order terms. Here are some common time complexity classes:

- Constant Time: O(1) – The execution time is the same, regardless of the input size.

- Logarithmic Time: O(log n) – The execution time grows logarithmically with input size. Binary search on a sorted array is an example of this.

- Linear Time: O(n) – The execution time grows linearly with the input size. Linear search exemplifies linear time complexity.

- Quadratic Time: O(n^2) – The execution time grows proportionally to the square of the input size. Bubble sort has quadratic time complexity in its average and worst cases.

- Exponential Time: O(2^n) – The execution time doubles with each additional element in the input.
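Exponential growth is easiest to see by counting recursive calls. The sketch below is a hypothetical illustration, not an algorithm from the text: a naive recursive subset-sum search that, for each element, branches into "leave it out" and "include it". The function name `count_calls_subset_sum` and the call counter are assumptions added only to make the doubling visible.

```python
# Hypothetical example: naive recursive subset sum, used here only to
# illustrate O(2^n) growth. It is not an algorithm from the text above.
def count_calls_subset_sum(nums, target):
    """Return (found, calls): whether some subset of nums sums to target,
    and how many recursive calls the naive search made."""
    calls = 0

    def explore(i, remaining):
        nonlocal calls
        calls += 1
        if i == len(nums):
            return remaining == 0
        # Two branches per element: leave nums[i] out, or include it.
        return explore(i + 1, remaining) or explore(i + 1, remaining - nums[i])

    found = explore(0, target)
    return found, calls


# An odd target can never be reached with even numbers, so every branch is
# explored: the call count is 2**(n + 1) - 1, roughly doubling per element.
for n in range(1, 6):
    print(n, count_calls_subset_sum([2] * n, 1))
```

When a matching subset exists, the short-circuiting `or` can stop early, so this is a worst-case picture.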

Space Complexity

Space complexity measures how the total amount of memory an algorithm uses grows with the input size. Like time complexity, it is expressed using Big O notation. For instance:

- Constant Space: O(1) – The amount of memory used doesn’t change with the input size.

- Linear Space: O(n) – The amount of memory used grows linearly with the input size.

Understanding these complexities helps developers write more efficient algorithms, which is crucial for large data sets or for applications where performance is a significant concern.

Analyzing Algorithm Efficiency

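To analyze an algorithm’s efficiency, we often consider its best-case, worst-case, and average-case behavior for inputs of size n:

- Best case: The cost on the most favorable input, such as linear search finding its target in the first position.

- Worst case: The cost on the least favorable input, such as linear search scanning the whole list because the target is last or absent.

- Average case: The expected cost over all inputs of size n, under some assumption about how those inputs are distributed.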

Considering all three gives a more complete picture of an algorithm’s efficiency than any one of them alone. The worst case is quoted most often, because it is a guarantee that holds for every input.
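Linear search, listed above as an O(n) example, makes the three cases concrete. This is a minimal sketch that counts comparisons; the name `linear_search` and its `(index, comparisons)` return value are assumptions chosen for illustration.

```python
def linear_search(arr, target):
    """Return (index, comparisons); index is -1 if target is absent."""
    comparisons = 0
    for i, value in enumerate(arr):
        comparisons += 1
        if value == target:
            return i, comparisons
    return -1, comparisons


data = list(range(1, 101))  # 100 elements: 1..100
print(linear_search(data, 1))    # best case: (0, 1)
print(linear_search(data, 100))  # worst case, target last: (99, 100)
print(linear_search(data, 0))    # worst case, target absent: (-1, 100)

# Average case, assuming the target is equally likely to be any element:
average = sum(linear_search(data, t)[1] for t in data) / len(data)
print(average)  # 50.5, i.e. (n + 1) / 2
```

The average still grows linearly with n, so linear search is O(n) in both the average and worst cases, and O(1) only in the best case.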

Algorithmic Efficiency with Examples

Introduction to Time and Space Complexity

Algorithmic efficiency is crucial for understanding how algorithms perform as data scales up. We often express this efficiency in terms of time complexity (how the execution time of an algorithm changes with input size) and space complexity (how much memory an algorithm uses during execution).

Time Complexity Examples

Example 1: Constant Time Complexity O(1)

Accessing a specific element in an array is an example of constant time complexity. No matter the size of the array, accessing an element takes the same amount of time.

```python
# Accessing an element by index
def access_element(arr, index):
    return arr[index]


# Regardless of array size, accessing is O(1)
arr = [1, 2, 3, 4, 5]
print(access_element(arr, 3))  # prints 4
```

Example 2: Linear Time Complexity O(n)

Finding the maximum value in an unsorted list exemplifies linear time complexity since we must check each element in the list.

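```python
# Finding the maximum in a list
def find_maximum(arr):
    if not arr:
        raise ValueError("find_maximum() requires a non-empty list")
    max_value = arr[0]
    # Visit each element once, so the running time is O(n)
    for num in arr:
        if num > max_value:
            max_value = num
    return max_value


arr = [5, 3, 8, 1, 2]
print(find_maximum(arr))  # prints 8
```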

Example 3: Quadratic Time Complexity O(n^2)

Bubble sort demonstrates quadratic time complexity. It repeatedly passes over the list, comparing and swapping adjacent pairs, and the version below always makes n(n - 1)/2 comparisons, a number that grows as n^2.

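```python
# Bubble sort algorithm (sorts arr in place and also returns it)
def bubble_sort(arr):
    n = len(arr)
    for i in range(n):
        # After i passes, the last i elements are already in their final
        # positions, so each pass can stop i elements earlier
        for j in range(0, n - i - 1):
            if arr[j] > arr[j + 1]:
                arr[j], arr[j + 1] = arr[j + 1], arr[j]
    return arr


# Bubble sort has a time complexity of O(n^2)
arr = [2, 5, 1, 8, 4]
print(bubble_sort(arr))  # prints [1, 2, 4, 5, 8]
```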
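To see the quadratic growth of bubble sort concretely, the sketch below repeats the same loop structure and counts comparisons. The name `bubble_sort_comparisons` is hypothetical; it sorts a copy so the caller's data is left unchanged.

```python
def bubble_sort_comparisons(arr):
    """Sort a copy of arr with bubble sort; return (sorted_list, comparisons)."""
    arr = list(arr)  # work on a copy so the caller's data is untouched
    n = len(arr)
    comparisons = 0
    for i in range(n):
        for j in range(0, n - i - 1):
            comparisons += 1
            if arr[j] > arr[j + 1]:
                arr[j], arr[j + 1] = arr[j + 1], arr[j]
    return arr, comparisons


for n in (10, 20, 40):
    _, count = bubble_sort_comparisons(range(n, 0, -1))
    print(n, count)  # prints 10 45, 20 190, 40 780
```

Each doubling of n roughly quadruples the comparison count, which is the signature of O(n^2).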

Space Complexity Examples

Example 1: Constant Space Complexity O(1)

Calculating the sum of two numbers uses constant space, because it needs a fixed amount of memory for the result no matter which values are passed in (assuming fixed-size numbers).

```python
# Sum of two numbers
def sum_two_numbers(a, b):
    return a + b


# Uses O(1) space
print(sum_two_numbers(5, 7))  # prints 12
```
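A more telling O(1) case is an algorithm that reads an input of size n but keeps only a fixed number of extra variables. This is often called O(1) auxiliary space, since the input itself is not counted; `find_maximum` above is one such algorithm. The sketch below (function names are illustrative) sums a list with a single accumulator and, for contrast, stores every partial sum, which needs O(n) extra space.

```python
def running_total(arr):
    """Sum a list using O(1) extra space: one accumulator, no new lists."""
    total = 0
    for num in arr:
        total += num
    return total


def prefix_sums(arr):
    """For contrast: storing every partial sum needs O(n) extra space."""
    sums = []
    total = 0
    for num in arr:
        total += num
        sums.append(total)
    return sums


print(running_total([1, 2, 3, 4, 5]))  # prints 15
print(prefix_sums([1, 2, 3, 4, 5]))    # prints [1, 3, 6, 10, 15]
```

Both functions take O(n) time; they differ only in how much memory they keep.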

Example 2: Linear Space Complexity O(n)

Creating a copy of a list requires linear space, since the size of the storage needed grows linearly with the size of the input list.

```python
# Copying a list
def copy_list(arr):
    copied_list = arr[:]  # slicing creates a new list of n elements
    return copied_list


# Copying the list has a space complexity of O(n)
arr = [1, 2, 3, 4, 5]
print(copy_list(arr))  # prints [1, 2, 3, 4, 5]
```

As these examples suggest, time complexity and space complexity are measured separately, and improving one often costs the other: an algorithm can be fast but use a lot of memory, or be slow but very memory-efficient. Understanding these trade-offs is essential when designing algorithms.
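A common illustration of this trade-off is checking a list for duplicates. The sketch below shows two hypothetical functions: one compares every pair and keeps no extra data, the other remembers values in a Python `set`. The set version assumes the elements are hashable, and its O(n) bound is an average case, because set lookups are O(1) on average rather than guaranteed.

```python
def has_duplicate_quadratic(arr):
    """O(n^2) time, O(1) extra space: compare every pair once."""
    n = len(arr)
    for i in range(n):
        for j in range(i + 1, n):
            if arr[i] == arr[j]:
                return True
    return False


def has_duplicate_with_set(arr):
    """O(n) average time, O(n) extra space: remember values already seen."""
    seen = set()
    for value in arr:
        if value in seen:
            return True
        seen.add(value)
    return False


print(has_duplicate_quadratic([4, 1, 7, 1]))  # prints True
print(has_duplicate_with_set([4, 1, 7, 2]))   # prints False
```

The set version trades memory for speed; the pairwise version is preferable only when memory is tight or the elements cannot be hashed.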

Time Complexity Questions and Answers

- What is time complexity?

Time complexity is a measure of how the running time of an algorithm grows as a function of the input size, usually counted in basic operations rather than seconds.

- Why is time complexity important?

Time complexity is important because it helps us predict the scalability of an algorithm and how it will perform as the size of the input data grows.

- What does O(n) time complexity mean?

O(n) time complexity means that the execution time of an algorithm grows linearly with the size of the input.

- How does O(log n) time complexity differ from O(n)?

O(log n) time complexity means the algorithm’s execution time grows logarithmically with the input size, which is far more efficient than O(n) for large data sets. For example, for n = 1,000,000, log₂ n is only about 20.

- Can you provide an example of an algorithm with O(1) time complexity?

An algorithm that returns the first element of a list has O(1) time complexity, as it takes constant time regardless of the list size.

- What type of algorithm has O(n^2) time complexity?

Algorithms with two nested loops that each run over the input data, such as bubble sort, typically have O(n^2) time complexity.

- Is O(n + m) the same as O(n)?

Not in general. O(n + m) describes an algorithm that processes two inputs of sizes n and m. If m can grow independently of n, the m term cannot be dropped; the bound simplifies to O(n) only when m is at most a constant multiple of n.
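A typical O(n + m) algorithm touches each of two independent inputs once. This is a minimal sketch with a hypothetical `common_elements` function; its bound is an average case, because it relies on Python `set` lookups being O(1) on average.

```python
def common_elements(a, b):
    """Return the values of a that also appear in b.

    Building the set costs O(m) and scanning a costs O(n), so the total
    is O(n + m) on average (set lookups are average-case O(1)).
    """
    b_values = set(b)
    return [x for x in a if x in b_values]


print(common_elements([1, 2, 3, 4], [3, 4, 5]))  # prints [3, 4]
```

If b were much longer than a, dropping the m term would understate the cost, which is why both sizes stay in the bound.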

- What does the Big O notation typically describe?

Big O notation gives an asymptotic upper bound on how a function grows. In algorithm analysis it is most often applied to the worst-case running time, so it bounds the time an algorithm can take on any input of size n, up to a constant factor.

- What is a real-world example of an O(n log n) algorithm?

Merge sort and heapsort are examples of O(n log n) algorithms, often used for sorting large datasets in the real world.
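Merge sort shows where the n log n comes from: halving the list repeatedly gives about log₂ n levels of recursion, and merging at each level does O(n) work. The sketch below is a straightforward top-down version that returns a new list. It uses O(n) extra space, and the slicing choice is for readability rather than efficiency.

```python
def merge_sort(arr):
    """Return a new sorted list: O(n log n) time, O(n) extra space."""
    if len(arr) <= 1:
        return list(arr)
    mid = len(arr) // 2
    left = merge_sort(arr[:mid])    # sort each half recursively
    right = merge_sort(arr[mid:])

    # Merge the two sorted halves in linear time.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:     # <= keeps equal elements in order (stable)
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])         # at most one of these is non-empty
    merged.extend(right[j:])
    return merged


print(merge_sort([5, 3, 8, 1, 2]))  # prints [1, 2, 3, 5, 8]
```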

- What is the time complexity of a binary search?

The time complexity of a binary search is O(log n) because it splits the search interval in half each time.
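A minimal iterative binary search makes the halving explicit. It assumes the input list is already sorted in ascending order; `binary_search` is an illustrative name, and Python's standard `bisect` module offers a library alternative.

```python
def binary_search(arr, target):
    """Return the index of target in the sorted list arr, or -1 if absent."""
    low, high = 0, len(arr) - 1
    while low <= high:
        mid = (low + high) // 2
        if arr[mid] == target:
            return mid
        if arr[mid] < target:
            low = mid + 1   # target can only be in the right half
        else:
            high = mid - 1  # target can only be in the left half
    return -1


data = [1, 3, 5, 7, 9, 11]
print(binary_search(data, 7))  # prints 3
print(binary_search(data, 4))  # prints -1
```

Each iteration discards half of the remaining interval, so at most about log₂ n + 1 iterations run.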